I was asked to give two talks at the Boston Area Haskell User Group this past Tuesday. The first was pitched at a more introductory level, and the second was to go deeper into what I have been using monoids for lately.

The first talk introduces the mathematical notion of a monoid, covers some of the features of my Haskell monoids library on Hackage, and starts to motivate both monoidal parallel/incremental parsing and the modification of compression algorithms to recycle monoidal results.
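As a rough illustration of why a monoid helps with parallel or incremental parsing, here is a minimal sketch of a well-known toy example, checking balanced parentheses. It is not taken from the slides or from the monoids library; the names `Parens`, `step`, and `balanced` are made up for this sketch, and it assumes a GHC recent enough (8.4 or later) that `Semigroup` is exported by the Prelude.

```haskell
-- Summary of a chunk of input: the number of unmatched ')' on its left
-- edge and the number of unmatched '(' on its right edge.
data Parens = Parens Int Int deriving (Eq, Show)

instance Semigroup Parens where
  -- Opens left over from the first chunk cancel closes from the second.
  Parens a b <> Parens c d
    | b <= c    = Parens (a + c - b) d
    | otherwise = Parens a (d + b - c)

instance Monoid Parens where
  mempty = Parens 0 0

-- Summarize a single character.
step :: Char -> Parens
step '(' = Parens 0 1
step ')' = Parens 1 0
step _   = mempty

-- A string is balanced when nothing is left unmatched on either side.
balanced :: String -> Bool
balanced s = foldMap step s == mempty

main :: IO ()
main = do
  let (l, r) = splitAt 4 "(a(b)c)"
  -- Summarizing the two halves separately and combining them agrees
  -- with summarizing the whole string in one pass.
  print (foldMap step l <> foldMap step r == foldMap step (l ++ r))
  print (balanced "(a(b)c)")
  print (balanced ")(")
```

Because `<>` is associative, the input can be cut at arbitrary points, each piece summarized independently (in parallel, or only where an edit touched it), and the summaries combined afterwards.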

The second talk covers a way to generate a locally context-sensitive parallel/incremental parser. It modifies Iteratees so that they can drive a Parsec 3 lexer, wraps the result in a monoid based on error productions in the grammar, and then recycles these techniques at a higher level to parse seemingly stateful structures, such as Haskell layout.

- Introduction To Monoids (PDF)

- Iteratees, Parsec and Monoids: A Parsing Trifecta (PDF)

Due to a late start, I was unable to give the second talk. However, I did give a quick run-through to a few die-hards who stayed late and came to the Cambridge Brewing Company afterwards. As I promised some people that I would post the slides after the talk, here they are.

The current plan is to give the second talk in full at either the September or October Boston Haskell User Group session, depending on scheduling and availability.

[ Iteratee.hs ]

is said to be

is said to be  if it is naturally isomorphic to

if it is naturally isomorphic to  .

. play the role of

play the role of  with:

with: and

and  consists of a pair of functors

consists of a pair of functors  , and

, and  and a natural isomorphism:

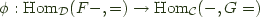

and a natural isomorphism:

the left adjoint functor, and

the left adjoint functor, and  the right adjoint functor and

the right adjoint functor and  an adjoint pair, and write this relationship as

an adjoint pair, and write this relationship as